AI makes it harder to tell fact from fiction

Advances in generative AI make it easier to create and distribute altered and fake audio and video content.

Generative AI can produce hyper-realistic images, audio, and video that imitate real people saying or doing things that never happened. These are called deepfakes.

AI software now lets anyone produce audio that mimics the voices of public figures, such as presidents and other world leaders, for as little as $5.

These tactics are already in use: in January 2024, an AI-generated fake robocall impersonating President Joe Biden was sent to New Hampshire voters in an attempt to reduce turnout in the state's primary. (See the example below.)

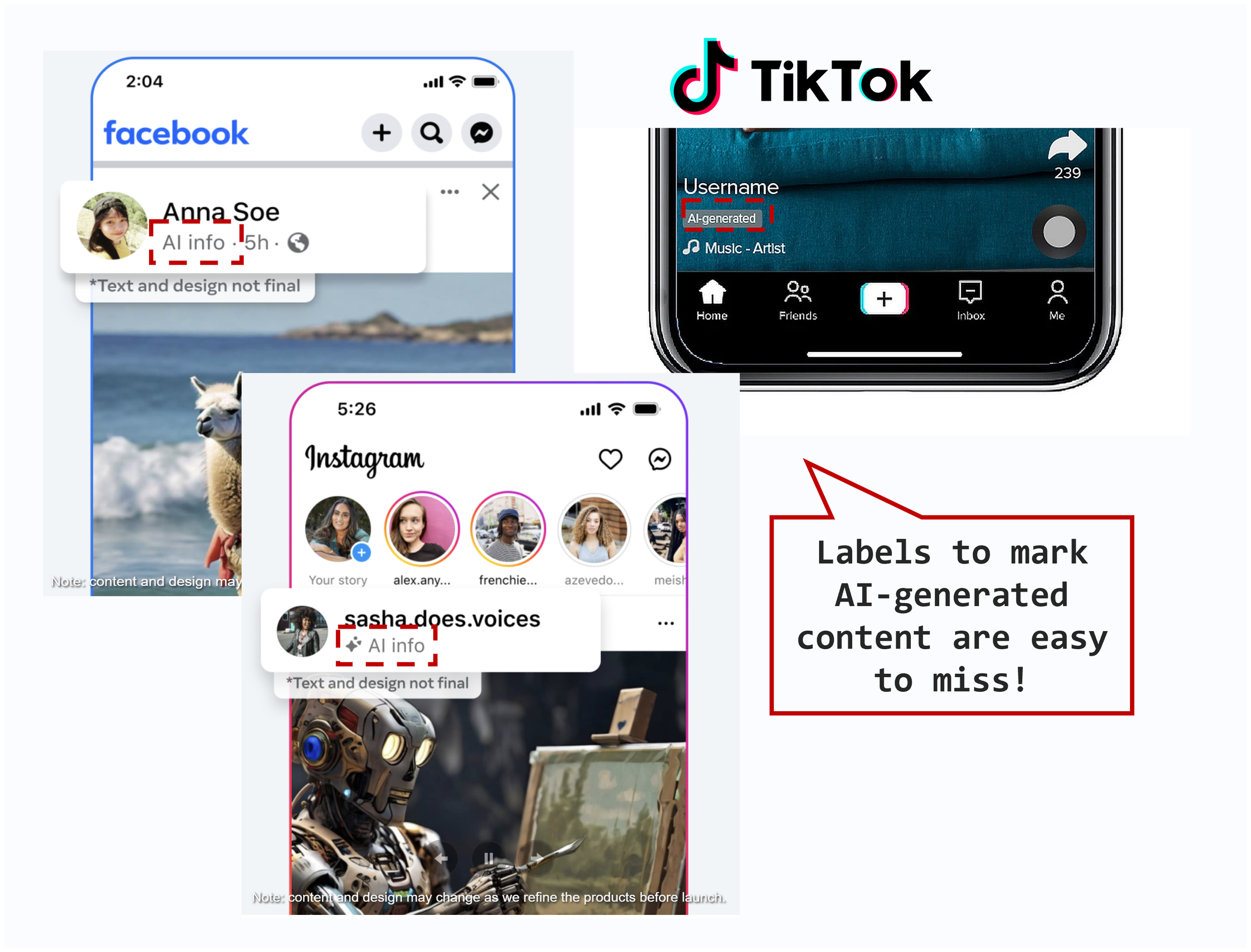

Social media companies are starting to label AI-generated content.

Here’s what some of the labels look like:

Keep in mind:

Not all manipulated or false content uses AI. Much of it relies on manually edited images or misrepresents real information, and it can escape moderation and labeling.

Amid the confusion created by AI-generated content, some people may claim that real content is fake in order to escape accountability. Read more about this "liar's dividend" in the "Supporting Research" section below.

These labels are a helpful guide, but not foolproof!

Be critical of all content and fact-check stories across multiple sources.

More on AI

- AI-generated fake robocall impersonating Joe Biden

- Take our quiz to see if you can spot the difference between real and AI-generated politicians!

AI is starting to resemble reality: Telltale signs like distorted hands, messy hair, and garbled language will become less common in AI-generated media over time, especially in deepfakes designed to mislead people.

Fakes take many forms: Be alert across all messages, including text, videos, photos, and especially audio. Fake audio can be particularly deceptive because it offers fewer context clues.

Watch out for fakes of less prominent or made-up figures: These are harder to disprove before they spread than fakes of major figures like President Biden.

Pay attention to labels, but be critical of all content: AI is a new tool that adds to existing techniques for misleading people, such as manipulated media or real images taken out of context.

Beware the liar’s dividend: As AI-generated deepfakes become more common, people may also try to falsely dismiss authentic content as deepfakes.

Stay informed, question, and verify before you trust or share!

Supporting Research

AI can produce hyper-realistic images and convincing "deepfakes" (audio or video digitally altered to make real people appear to say or do things they never said or did):

- The New York Times published an online test inviting readers to look at 10 images and identify which were real and which were AI-generated, showing firsthand how difficult it is to tell real images from AI-generated ones.

- A Rolling Stone article reports on how AI-generated audio clips of public figures, most notably President Biden, are used in comedic parodies.

- In January 2024, a robocall impersonating President Biden went to New Hampshire voters, falsely asserting that voting in the primary would prevent them from participating in the November general election.

Voters should also be wary of the potential for deepfakes to undermine trust in real information:

- The Liar's Dividend describes how, by exploiting the perception that "AI is everywhere", people can dismiss real content as deepfakes or AI-generated, eroding trust in genuine information.

Read more on the potential impacts of AI on the election here!